- Saturday, May 12, 2018

- 13:00 to 18:00

- Alibaba Office

- 525 Almanor Ave 4th Floor, Sunnyvale

Hello, Infrastructure Engineer!

Welcome to the very first event of the Bay Area Cluster Managment Meetup. Our goal is to share technical insights in this area, and get engineers connected.

We are going to hold a series of activities in Alibaba's new office in Sunnyvale, and looking forward to your warm participation. If you are interested, please click the link below to register for the exciting activities.

If you are interested in sharing your experiences – either as speaker or as user – kindly contact us: [email protected]

__Sign up now: __https://www.meetup.com/Alibaba-AIOps-Meetup/events/250165871/?_xtd=gqFyqTI0MjQ4Mzk4MqFwo3dlYg&from=ref

More details: You're Invited! Join the Bay Area Scheduler & Container Meetup

Speakers

- Yu Ding Sr. Staff Software Engineer at Alibaba Group / Tech Lleader of the Cluster Management / Scheduling Team

- Xiang Li Sr. Staff Software Engineer at Alibaba Group

- Yi Wang Senior Scientist at Baidu AI Platform and Tech Lead of PaddlePaddle

- __Liping Zhang __Principal Engineer at Alibaba Group and the chief architect of Alibaba scheduling / cluster management system

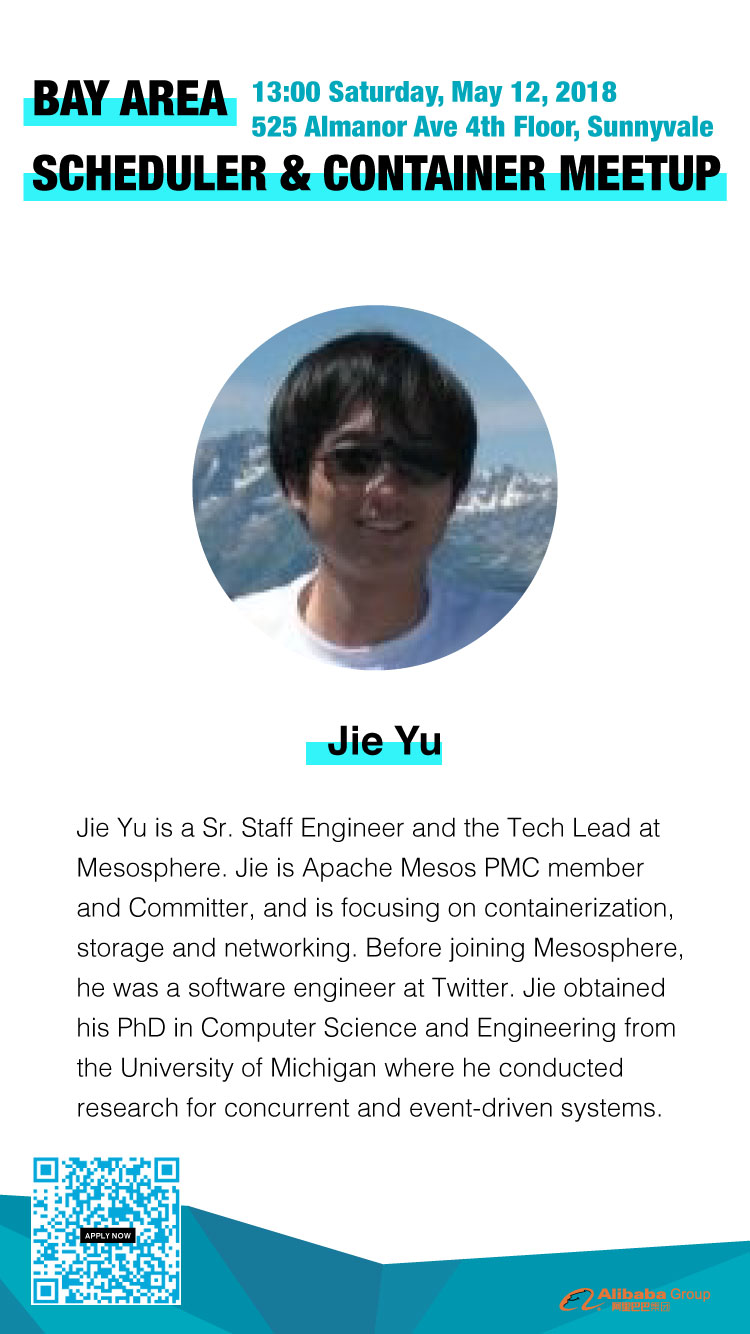

- __Jie Yu __Sr. Staff Engineer and the Tech Lead at Mesosphere

- __Haiying Wang __Tech Leader of the Cluster Management Team at LinkedIn

Agenda

- 13:30 - 14:00 Check In

- 14:00 - 14:50 The Challenges and Possibilities for Alibaba Cluster Management System

- 14:50 - 15:40 PaddlePaddle Fluid: Elastic Deep Learning on Kubernetes

- 15:40 - 16:00 Coffee Break & Speed Networking

- 16:00 - 16:50 The engine of Sigma: the Sigma scheduler

- 16:50 - 18:00 Panel Discussion

Talks

1

The Challenges and Possibilities for Alibaba Cluster Management System

Sigma cluster management is the core infrastructure of Alibaba that manages most online services. Through our in-house developed PouchContainer technology, Sigma forms the basis for the goal of managing the computers of Alibaba data centers as one computer. In this talk, we will introduce the goal and positioning of Alibaba cluster management system and business scenarios. We will also share the problems we have solved, the insights of our architecture design, as well as the challenges and opportunities we face and our future plans for the Alibaba cluster management.

2

PaddlePaddle Fluid: Elastic Deep Learning on Kubernetes

Industrial deep learning requires significant computation power. Traditional management systems like SLURM, MPI, and SGE do not support elastic scheduling. A job that requires 100 nodes and submitted to a cluster with 99 idle nodes would have to wait for a long time and the cluster suffers from a low utilization. PaddlePaddle EDL introduces a scheduler that implements elastic scheduling. Our scheduler considers prioritization so it can elastically schedule all kinds of jobs, e.g., web server, log collector, data processor, and deep learning, running on a general-purpose cluster, and builds a highly efficient data pipeline. The third part of our work is to make PaddlePaddle supports fault-tolerant distributed training so that killing or starting processes of a training job doesn't stop it. On a bare-metal cluster shared with the academia, we observed ~91% of general utilization, which is times higher than the average number of 18% observed from MPI and SLURM clusters.

3

The engine of Sigma: the Sigma scheduler

The sigma scheduler is a policy-rich, micro-topology-aware, workload-specific control plane component that places workload to the nodes. The scheduler needs to take into account individual and collective resource requirements, quality of service requirements, hardware/software/policy constraints, anti-affinity specifications, data locality, workload interference, and so on. The quality of the scheduler significantly impacts the overall cluster performance and utilization. In this talk, we will present the overall design principle of the sigma scheduler and its architecture. We will also explore some of the interesting functionalities that are designed to handle large scale low latency workload.

Speakers